What is AI (Artificial Intelligence), and why is everyone talking about it? It is fast becoming everyone’s favorite buzzword, and it is changing almost every aspect of daily life in industrialized countries, whether you know it or not. Autonomous vehicles, robotics, customer service, utilities: all of these areas are retooling for an AI-driven future. Artificial Intelligence routines and algorithms are starting to form the backbone of major systems and networks by providing robust analytics and predictions. People are even using AI-driven tools to bring new products and services to the remotest regions and communities. AI spans a wide variety of domains, from cognitive science to statistics and programming, but a very simple definition is teaching computers to imitate (and eventually replicate) human intelligence.

Why is AI Important?

AI becomes a powerful tool when it is integrated into networks and systems, because it can use machine cognition to take over tasks that would otherwise require a human response, with much greater speed and effectiveness. It is also crucial to responsive feedback systems in robotics and user interfaces. The goal of most AI research is not to replace human beings entirely, but to replicate key traits of human intelligence at scale for specific use cases. Only at the cutting edge of AI do researchers try to develop machine cognition for the most complex scenarios, such as algorithms that can learn to solve problems without guidance or make free associations as human beings do.

The more important question is what we mean when we talk about AI. In our industry, AI capabilities such as machine learning, computer vision, and predictive modeling can help us gain a greater understanding of complex problems and build new tools for ourselves and our clients. These tools and insights are aids to the design process: they serve human users by distilling the experience of clients and augmenting the existing problem-solving abilities of designers. They might also take the form of systems and applications trained on designer inputs to deliver new products and services to clients.

By melding the best abilities of both human and machine cognition, we can develop EI: Extended Intelligence.

What do we mean by cognition?

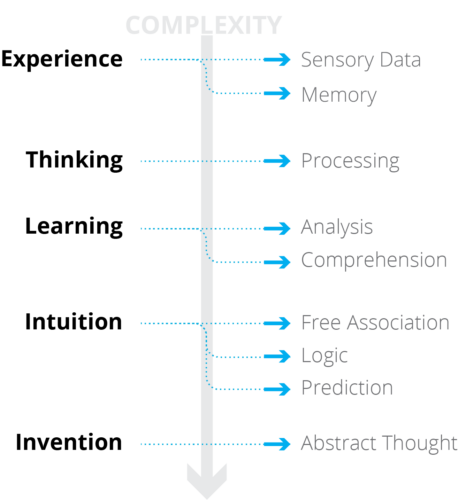

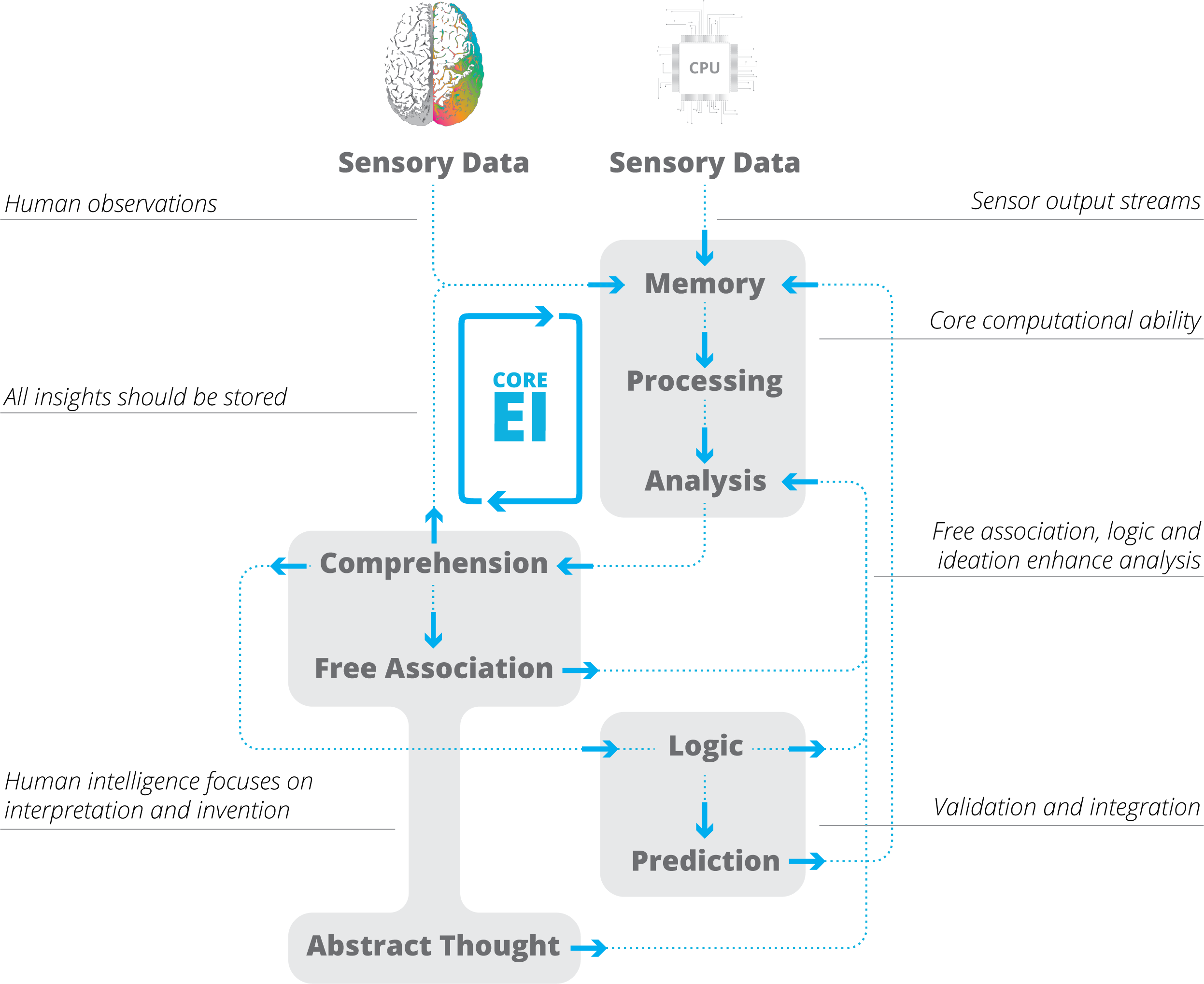

The first thing to establish is what we mean by cognition. The diagram above lays out a hierarchy of cognitive skills. Experience is the first and most basic cognitive skill: translating sensory data into impressions and filing them away for future reference. The next skill is thinking, by which we mean the simple act of processing information. These two skills form the basis of cognition because they are the most basic inputs and capabilities of a cognitive system.

The higher functions all build on these basic skills. Learning combines analysis (examining a problem to understand relationships) and comprehension (understanding why and how those relationships work). Intuition comprises an even more complex set of functions that operate outside clearly visible or defined relationships. Free association is key to intuition: it is the ability to make connections between elements without clearly defined data. Applying logic to an association helps us assess whether it is realistic, which gives us a limited ability to predict. Finally, there is the capacity for invention: creating entirely new constructs through ideation and abstract thought.

What are the differences between human and machine cognition?

Now that we have a cognition heuristic, let's evaluate how human intelligence and machine intelligence compare across these functions. Remember that we want to understand the limits of human cognitive abilities and the opportunities to augment human intelligence with machines. There are obviously ranges of both human and algorithmic intelligence, but we are more concerned with charting where and how they differ. For example, cloud-based computing services now offer almost limitless memory and processing power, on demand and at scale. As amazing as the human brain is, it cannot begin to approach the immense capacity of cloud compute infrastructure. However, the human brain is remarkably good at interpreting sensory data almost instantaneously, since that ability is central to our development and existence.

Human Cognition vs. Machine Cognition (comparison chart)

We can clearly begin to see how human and machine cognition differ but also complement each other. We know that cloud storage and compute, combined with AI algorithms, have led to fantastic new tools and services. Computers are logic machines at their core and can now perform trillions of logical operations a second. At cloud scale, that capacity multiplies across thousands of machines. This means that AI models can be trained on millions of data points stored in memory and then deployed to perform rapid analyses that return results or make predictions.

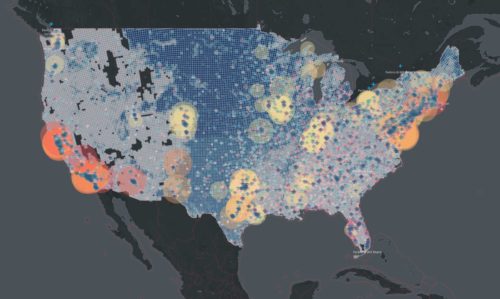

Although computers excel at storage and processing, comprehension is a weak point for machine cognition. AI is excellent at returning results through analysis, often identifying patterns in data that would be nearly impossible to find manually. It still takes a human to interpret those findings and understand what they reveal about the problem under study. Algorithms can be programmed to interpret findings, but they still largely require human direction in order to “comprehend”. The sheer scale of AI analysis has led to great leaps in the ability of machines to perform qualitative analysis, but this is also an area where human cognition still excels, because it relies heavily on intuition.

Where human cognition far outperforms machine cognition is in free association and abstract thought. The ability to intuit underlying relationships and conditions from an amalgam of experience, knowledge, and imagination is something machines may never be able to replicate. Machine cognition might let you validate or reject your intuition more quickly and easily, and that frees up time for more iterations of analysis or for the final function: invention. If machine cognition ever achieves the breadth and depth of abstract thought that human invention requires, it may be impossible to tell the two apart.

What Does Extended Intelligence Look Like?

If we are to seamlessly blend the strengths of human and machine cognition, we must identify a workflow that lets them work in concert and generate synergy. All intelligence systems require inputs, and here both human observations and sensor data should be collected and stored. Human beings walk around with supercomputers on their shoulders, so human observation should focus on interpreting qualitative data from our senses. We shouldn’t have to deploy someone with a clicker to count people when a sensor or computer vision algorithm can do the same task continuously in real time, nor should we rely on intuitive judgments based on sparse observational data.

All these inputs should be stored and form the basis for processing and analysis by computers. The output of this analysis needs to be interpreted and understood by humans, and a primary task for human agents going forward is classifying and verifying data that can be used to train AI algorithms. These training sets, along with all other insights, should be stored as well, becoming inputs for future analysis. With large-scale data storage and AI, this process can become very efficient, allowing for many iterations of analysis while human operators focus on understanding the results. The crucial role of humans in an EI system is to understand and group information so that it informs analyses in new ways. Free association allows data to be combined in different ways to tease out new understanding. This understanding can also be built back into the operational logic of computer systems to enable more advanced analysis and validation of future findings.
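The loop described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not a description of any particular EI system: `DataStore`, `machine_analysis`, `ei_cycle`, `retrain`, the sensor `count` field, and the "busy"/"quiet" labels are all assumptions made for the example, and a simple threshold stands in for a real AI model. The human reviewer is represented by a callback that encodes a person's judgment.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DataStore:
    """Hypothetical shared store: raw inputs and human-verified labels accumulate here."""
    inputs: list = field(default_factory=list)
    training_set: list = field(default_factory=list)  # (item, verified_label) pairs


def machine_analysis(item: dict, threshold: float) -> str:
    """Stand-in for an AI model: tag a space 'busy' when the sensor count exceeds the threshold."""
    return "busy" if item["count"] > threshold else "quiet"


def ei_cycle(store: DataStore, new_inputs: list, threshold: float,
             human_review: Callable[[dict, str], str]) -> list:
    """One pass of the loop: store inputs, analyse by machine, verify by human, keep the labels."""
    store.inputs.extend(new_inputs)                     # 1. every input is stored
    results = []
    for item in new_inputs:
        proposed = machine_analysis(item, threshold)    # 2. machine processing
        verified = human_review(item, proposed)         # 3. a human interprets and verifies
        store.training_set.append((item, verified))     # 4. verified labels become training data
        results.append((proposed, verified))
    return results


def retrain(store: DataStore) -> float:
    """Toy 'training' step: place the new threshold midway between the largest count humans
    called quiet and the smallest count they called busy (assumes both labels are present)."""
    busy = [item["count"] for item, label in store.training_set if label == "busy"]
    quiet = [item["count"] for item, label in store.training_set if label == "quiet"]
    return (max(quiet) + min(busy)) / 2


def reviewer(item: dict, proposed: str) -> str:
    """Stand-in for a person who knows this room feels busy from 30 people upward.
    The machine's proposal is received but, in this toy, not consulted."""
    return "busy" if item["count"] >= 30 else "quiet"


store = DataStore()
readings = [{"count": c} for c in (12, 25, 31, 48, 20, 35)]
first_pass = ei_cycle(store, readings, threshold=40, human_review=reviewer)
disagreements = [r for r in first_pass if r[0] != r[1]]
new_threshold = retrain(store)
print(len(disagreements), new_threshold)  # 2 28.0
```

In this toy run the machine (threshold 40) disagrees with the reviewer on the 31- and 35-person readings; once the verified labels are fed back, the retrained threshold of 28.0 agrees with every human label. A real system would swap the threshold for a trained model and the callback for an actual review interface.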

The Critical Tasks of Human Intelligence

Looking at the framework above, the roles of the human actors in an extended intelligence system become clear. The most important role is directing information streams. Human observations provide invaluable inputs to the system, but the ability to comprehend outputs and direct models is even more critical. The “Core EI” function can repeat indefinitely, especially if inputs are generated automatically by systems and sensors, but each modification or redirection must be instigated and evaluated by a human operator. This is the current bottleneck in developing and deploying extended intelligence, because there is a relative dearth of highly trained data scientists and operators.

Fortunately, there are multiple ways to leverage human comprehension, and the most critical of these is generating “training” data for machine-run algorithms. Even an untrained human operator can look at an image and judge whether a tag is correct. Everyday interactions and transactions can be assessed and labeled by almost anyone, and especially well by people with specific expertise. Applying intuition built on broad experience, people can often tell whether the outputs of a machine analysis are real findings or noise. Better yet, people with subject-matter expertise can apply it to evaluating models, which can then be improved and deployed at scale as human-expert-trained algorithms.
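To make the tag-verification step concrete, here is a hedged Python sketch of turning yes/no human judgments on machine-proposed image tags into training data. The tag identifiers and votes are invented, majority voting is just one simple way to combine several reviewers (with ties treated as rejections, an assumed policy), and the share of confirmed tags is only a rough, human-measured precision for the model.

```python
from collections import Counter


def aggregate_verdicts(verdicts: dict[str, list[bool]]) -> dict[str, bool]:
    """Majority vote over reviewers' yes/no judgments for each proposed tag.
    Ties count as 'not verified' (a conservative, assumed policy)."""
    accepted = {}
    for tag_id, votes in verdicts.items():
        counts = Counter(votes)
        accepted[tag_id] = counts[True] > counts[False]
    return accepted


def tag_precision(accepted: dict[str, bool]) -> float:
    """Share of machine-proposed tags that humans confirmed: a rough, human-measured precision."""
    return sum(accepted.values()) / len(accepted)


# Hypothetical data: a few reviewers judge four tags proposed by an image model.
verdicts = {
    "img01:chair": [True, True, True],
    "img02:table": [True, False, True],
    "img03:person": [False, False, True],
    "img04:plant": [True, False],
}
accepted = aggregate_verdicts(verdicts)
training_examples = [tag for tag, ok in accepted.items() if ok]
print(training_examples)        # ['img01:chair', 'img02:table']
print(tag_precision(accepted))  # 0.5
```

Only the confirmed tags go forward as training examples; the rejected ones are still useful, since they show where the model produces noise rather than real findings.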

Finally, human intelligence is essential for developing new questions and challenges for AI systems to solve. This is one area where AI has almost no ability beyond applying huge amounts of compute to random operations and learning from its own output. By constructing interesting problem frameworks, human intelligence creates domains for AI to operate within, so that the focus can remain on augmenting the system by directing and refining information flows.